
August 2013 - Volume 7, Issue 4

Knowledge and perception regarding Objective Structured Clinical Examination (OSCE) and Impact of OSCE workshop on Nurse Educators


Firdous Jahan (1)

Mark Norrish (2)

Gina Lim (3)

Othman Vicente (4)

Gracita Ignacio (5)

Aisha Al-Shibli (6)

Khadija Al-Marshudi (7)

(1) Dr Firdous Jahan, Associate Professor/Head of the Department, Family Medicine, Oman Medical College.

(2) Dr Mark Norrish, Associate Professor and Head of Department of Social and Behavioral Medicine, Oman Medical College, Sohar

(3) Dr Gina Lim, Tutor, North Batinah Nursing Institute, Sohar Hospital

(4) Mr. Othman Vicente, Senior Trainer, North Batinah Nursing Institute, Sohar Hospital

(5) Mrs. Gracita Ignacio, Senior Trainer, North Batinah Nursing Institute, Sohar Hospital

(6) Mrs. Aisha Al-Shibli, Senior Trainer, North Batinah Nursing Institute, Sohar Hospital

(7) Mrs. Khadija Al-Marshudi, Trainer, North Batinah Nursing Institute, Sohar Hospital

Correspondence:

Dr Firdous Jahan, Associate Professor/Head of the Department, Family Medicine, Oman Medical College.

Email: firdous@omc.edu.om


Abstract

Objective: To evaluate the knowledge and perception of nurse educators regarding objective structured clinical examination (OSCE) construction, their self-assessment of skills in implementing OSCE during a workshop, and the impact of an OSCE workshop on nurse educators.

Background: Assessment is a powerful driver of student learning: it tells learners what they should be learning, what the learning organization believes to be important, and how they should go about learning. The OSCE is a practical test to assess specific clinical skills and a well-established method of assessing clinical competence.

Method: A cross-sectional descriptive study was designed to evaluate the knowledge and perception of nurse educators regarding OSCE at an OSCE workshop held at the North Batinah Nursing Institute, Sohar Hospital, Oman. The participants were given reading material and took part in interactive hands-on exercises. Performance was determined by direct observation of participants performing a mock OSCE. Pretests and posttests were conducted to assess change in knowledge.

Result: The participants showed a significant improvement in their mean posttest score compared with the pretest. They rated the workshop highly and were positive about their future ability to conduct an OSCE.

Conclusion: Adopting an interactive hands-on workshop to train nurse educators is feasible and appears to be effective. The feedback from workshop participants was overwhelmingly positive. The workshop changed some of the nurse educators' perceptions regarding the uniformity of OSCE scenarios, the use of OSCE for teaching audit and for demonstrating emergency skills, and whether OSCEs are time-consuming to construct and administer.

Key words: knowledge and perception, OSCE, nursing educators, self-assessment


Background/Introduction:

Assessment is an integral part of the health care profession. Clinical examination skills are the bridge between the patient's history and the investigations required to make a diagnosis: an 'adjunct to careful, technology-led investigations'[1].

The objective structured clinical examination (OSCE) has become a standard method of assessment for both undergraduate and postgraduate students[2]. The OSCE is a practical test to assess specific clinical skills and a well-established method of assessing clinical competence. It was first introduced in medical education in 1975 by Ronald Harden at the University of Dundee[3-4].

The aim of the OSCE was to assess clinical skills performance. The administration, logistics and practicalities of running an OSCE make it more expensive than traditional examinations[5]. However, this cost must be set against its reliability, which is far superior to that of the traditional short case. The OSCE is a versatile, multipurpose evaluative tool that can be used to assess health care professionals in a clinical setting; it assesses competency through objective testing based on direct observation[6].

Given its advantages in objectivity, uniformity and the versatility of the clinical scenarios that can be assessed, the OSCE style of clinical assessment allows clinical students at varying levels of training to be evaluated over a broad range of skills and issues within a relatively short period[7-8].

It is critical to improve the ability of faculty and preceptors to facilitate and assess learning, to apply instructional design principles when creating learning and assessment tools, and to assess student performance more objectively by implementing objective structured clinical examinations (OSCE) and validated knowledge assessments[9-10].

This study was conducted among nurse educators participating in an OSCE workshop, to evaluate their knowledge and perception regarding OSCE and to assess pre- and post-workshop knowledge gain together with their self-assessment.

Method

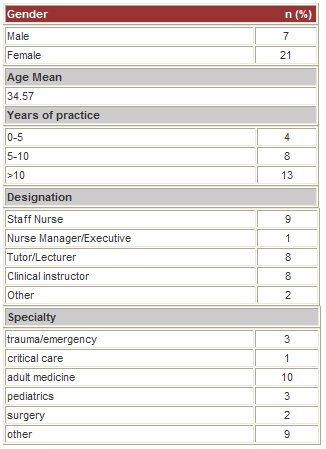

A cross-sectional survey was designed to evaluate the knowledge and perception of nurse educators regarding OSCE at an OSCE workshop held at the North Batinah Nursing Institute, Sohar Hospital, Sultanate of Oman. All nurse educators from different regions of Oman who participated in the workshop and gave consent to take part in the study were included.

Data Collection: Data were collected through a self-administered questionnaire that included demographic characteristics (age, gender, years of practice), questions regarding knowledge and perception of OSCE construction, and self-assessment of the participant's own performance. The principal investigator ensured uniformity, explained the objectives of the questionnaire to the participants and obtained written consent before collecting the data. The survey instrument was developed after a literature search and was reviewed and agreed on in several brainstorming sessions. The questionnaire was also validated on a small pilot group.

Ethical Considerations: The study proposal was approved by the Internal Review Board for ethics of Oman Medical College and by the Ministry of Health, Oman.

| | Program activities | Duration |
|---|---|---|
| 1 | Welcome note, orientation and pretest | 30 minutes |
| 2 | Overview of the training workshop and its objectives | 30 minutes |
| 3 | Historical background; application of OSCE in the Foundation Course of Nursing | 30 minutes |
| 4 | Learners' understanding of OSCE | 30 minutes |
| 5 | Importance of OSCE in nursing education | 30 minutes |
| 6 | Constructing stations and logistics | 1 hour |
| 7 | Testing the station: preparation, training the simulated patient, being a candidate and a supervisor | 2 hours |
| 8 | Feedback on stations: getting feedback from learners and giving feedback to candidates | 30 minutes |
| 9 | Evaluation: posttest and workshop evaluation | 30 minutes |

Workshop description

This workshop was designed to train the trainers in OSCE organization and implementation. It aimed to encourage constructive dialogue among health professional educators on the use of OSCE for student learning and assessment, to foster creativity and open-mindedness in developing OSCEs, to meet the challenges of developing and assessing skills in health professional education, and to ensure that all examiners were prepared to the same standard and fully understood their role. The main objectives were to understand the structure of the OSCE and the roles of the supervisors and the students, to list the logistics of setting up an OSCE, to demonstrate how to construct an OSCE station, and to learn how to train a simulated standardized patient.

The workshop emphasized interactive sessions based on practical exercises and hands-on experience, in addition to core lecture presentations on the OSCE process: the validity and reliability of the OSCE stations being used, the length of each station, the range of advanced clinical skills being examined, and how to discern whether the nurse practitioner student can independently assess and perform the task.

Workshop Structure:

The participants were given reading material and an information website regarding OSCE and its construction. The program was conducted over a whole day.

After the facilitators were introduced and a brief description of the workshop content was given, the participants took a pretest. The second activity covered the historical background and the application of OSCE in the Foundation Course of Nursing, followed by an introduction to the structure, importance, validity and reliability of the OSCE, learners' understanding of it, and its importance in nursing education. The participants were given a few OSCE cases to study before they began constructing their own OSCE stations and working through the logistics of setting up an OSCE, including the blueprint, inventory and venue preparation.

The participants were divided into groups of two or three. Each group was asked to write a short case station that included the information for students (aim, data and task/s), the scoring sheet for the supervisor, the materials required and the scenario for the standardized patient. Each group shared its prepared case with the others; all participants commented on the case and agreed on the final material. At the end of the session, the participants prepared the blueprint and inventory for the cases they had constructed. These stations were then used for the hands-on OSCE experience.

The last segment was a demonstration of what had been learned and observed in the previous sessions. The participants set up five OSCE stations and were divided into pairs. Standardized patients were available for each group to train. A real OSCE was then performed, with each member of the group acting alternately as supervisor and as student.

After that, participants met to receive feedback from the standardized patients and to give each other feedback on the OSCE process from the perspectives of students and supervisors, as well as on possible amendments to the station write-ups.

The final activity was the workshop evaluation and the posttest. During the evaluation session, the participants were encouraged to report any developments related to future implementation of the OSCE in their universities.

Evaluation

Participants were asked to complete an anonymous satisfaction survey, giving their opinion on a 5-point Likert scale (1 = Poor, 5 = Excellent) on the quality of the teaching material, the facilitators' knowledge and skills, the value of the hands-on experience, the quality of the syllabus/handout, the overall course, and their own ability to conduct an OSCE.

Data Analysis

Data were analyzed using the Statistical Package for the Social Sciences (SPSS version 18). The data were coded, analyzed and tabulated. Descriptive analysis, including frequencies, was performed, and paired-sample t tests with 95% confidence intervals were used to compare means and test for statistical significance.
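The paired-sample t test described above can be illustrated with a minimal Python sketch. It is not the SPSS procedure used in the study, and the pre- and posttest scores in the usage example are invented for illustration; they are not study data. The helper names (`paired_t_test`, `two_sided_p`, `t_critical`) are hypothetical. To stay within the standard library, the sketch evaluates the t distribution numerically (Simpson's rule for the two-sided p-value and bisection for the critical value used in the 95% confidence interval) instead of calling a statistics package.

```python
import math
import statistics


def t_pdf(x: float, df: int) -> float:
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value P(|T| >= |t|), integrating the density with Simpson's rule."""
    b = abs(t)
    h = b / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # P(0 <= T <= |t|)
    return max(0.0, 1.0 - 2.0 * area)


def t_critical(df: int, alpha: float = 0.05) -> float:
    """Critical value t* with P(|T| >= t*) = alpha, found by bisection."""
    lo, hi = 0.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if two_sided_p(mid, df) > alpha:
            lo = mid  # p still too large: the critical value lies further out
        else:
            hi = mid
    return (lo + hi) / 2


def paired_t_test(pre: list[float], post: list[float], alpha: float = 0.05) -> dict:
    """Paired-sample t test of (post - pre) with a (1 - alpha) confidence interval."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    se = sd_d / math.sqrt(n)
    df = n - 1
    t = mean_d / se
    margin = t_critical(df, alpha) * se
    return {
        "mean_diff": mean_d,
        "t": t,
        "df": df,
        "p": two_sided_p(t, df),
        "ci": (mean_d - margin, mean_d + margin),
    }


# Hypothetical 5-point Likert scores for one question (NOT the study's data).
pre = [3, 3, 4, 2, 3, 4, 3, 2]
post = [4, 4, 5, 3, 4, 4, 4, 3]
result = paired_t_test(pre, post)
print(result)
```

For these hypothetical scores the mean paired difference is 0.875 with t = 7.0 on 7 degrees of freedom; because the 95% confidence interval excludes zero, the pre-post difference would be reported as significant at the 5% level.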

Result

The response rate was 75%: of the 36 participants, 28 completed the pretest and posttest with feedback.

Distribution of responses, pretest and posttest

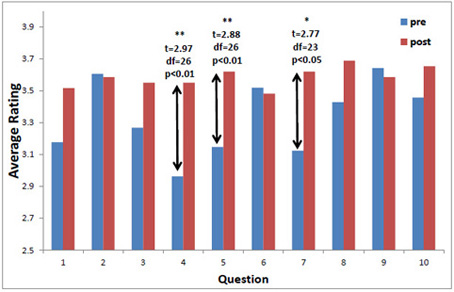

Paired-sample t tests were performed on the 10 questions asked of participants before and after the workshop. While all responses were generally favorable, with average scores for all questions (pre and post) above the Likert midpoint, there were significant pre-post differences for 3 of the questions.

Participants' views on whether OSCE scenarios are uniform (q4), whether OSCEs allow for teaching audit and for demonstration of emergency skills (q5), and whether they are time-consuming to construct and administer (q7) changed after this workshop. For all three items there was a significant increase in the level of agreement following the workshop.

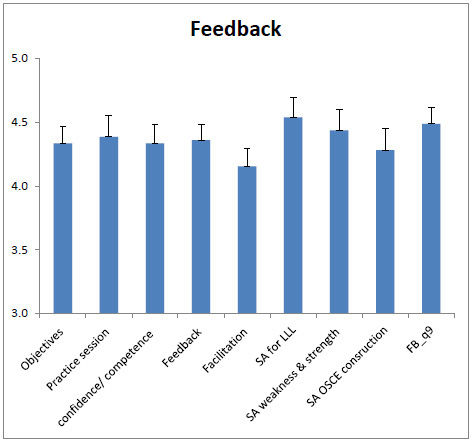

Feedback

The feedback from participants in the workshop was overwhelmingly positive, with all median values either ‘agree’ or ‘strongly agree’. Figure 2 shows the average of the feedback for each question (with standard error displayed).

| Feedback item | Strongly disagree (%) | Disagree (%) | Neither agree nor disagree (%) | Agree (%) | Strongly agree (%) | Mode |
|---|---|---|---|---|---|---|
| Objectives of this workshop were clear and achieved | 0 | 2.5 | 7.5 | 42.5 | 45 | Strongly agree |
| Quality/practice session with feedback helped in learning | 0 | 7.5 | 5 | 27.5 | 57.5 | Strongly agree |
| This workshop increased your level of confidence/competence in constructing OSCE | 0 | 2.5 | 12.5 | 32.5 | 50 | Strongly agree |
| Feedback was constructive for each group presentation | 0 | 2.5 | 2.5 | 50 | 42.5 | Agree |
| Facilitation of the workshop was appropriately done | 0 | 5 | 7.5 | 52.5 | 32.5 | Agree |
| Self-assessment is an important mechanism for lifelong learning and self-improvement | 2.5 | 2.5 | 0 | 27.5 | 65 | Strongly agree |
| Self-assessment identifies your weaknesses and strengths | 2.5 | 2.5 | 2.5 | 32.5 | 57.5 | Strongly agree |
| Self-assessment of skills in constructing OSCE | 2.5 | 2.5 | 7.5 | 37.5 | 47.5 | Strongly agree |
| Overall | 0 | 0 | 10 | 30 | 57.5 | Strongly agree |
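The Mode column, and the medians mentioned in the text, can be derived directly from each row's percentage distribution. The Python sketch below (the function name `summarize_likert` is hypothetical) takes the five percentages for one item, scores the categories from 1 to 5, and returns the mode, the median category and the mean score. Each row of the table sums to 97.5% rather than 100%, so the sketch normalizes by the row total; treating the missing share as non-response is an assumption made here for illustration.

```python
LABELS = [
    "Strongly disagree",
    "Disagree",
    "Neither agree nor disagree",
    "Agree",
    "Strongly agree",
]


def summarize_likert(percentages: list[float]) -> tuple[str, str, float]:
    """Return (mode, median category, mean score) for a 5-point Likert item.

    Categories are scored 1 (strongly disagree) to 5 (strongly agree).
    Percentages are normalized by their sum, so rows that do not add up
    to 100 (assumed here to reflect missing responses) are handled proportionally.
    """
    if len(percentages) != len(LABELS):
        raise ValueError("expected one percentage per Likert category")
    total = sum(percentages)
    if total <= 0:
        raise ValueError("percentages must sum to a positive value")
    # Mode: category with the largest share (the first one wins on ties).
    mode = LABELS[max(range(len(LABELS)), key=lambda i: percentages[i])]
    # Median: first category whose cumulative share reaches half of the total.
    cumulative = 0.0
    median = LABELS[-1]
    for label, share in zip(LABELS, percentages):
        cumulative += share
        if cumulative >= total / 2:
            median = label
            break
    mean = sum(score * share for score, share in enumerate(percentages, start=1)) / total
    return mode, median, mean


# Row "Objectives of this workshop were clear and achieved" from the table above.
mode, median, mean = summarize_likert([0, 2.5, 7.5, 42.5, 45])
print(mode, "|", median, "|", round(mean, 2))
```

For this row the sketch gives a mode of "Strongly agree" (matching the table), a median of "Agree" and a normalized mean of about 4.33, consistent with the statement that all medians fell on "agree" or "strongly agree".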

Discussion

There is growing international interest in teaching clinical skills in nursing education. The OSCE is a form of performance-based testing used to measure candidates’ clinical competence. It is designed to test clinical skill performance and competence in areas such as communication, clinical examination, medical procedures, prescription and interpretation of results. The workshop aimed to encourage nurse educators to construct OSCEs for student learning and assessment and to foster creativity in developing OSCEs. Expert-delivered workshops improve the ability to implement assessment approaches compared with self-study alone[11].

Participants in the workshop showed great enthusiasm and improvement in knowledge. The context, the educational tools and the participants' collective motivation to learn suggest that this approach is a feasible and effective strategy for disseminating and incorporating medical teaching.

Our study showed a significant improvement in posttest scores, indicating better knowledge of OSCE and its implementation. The literature supports the utility of OSCE as a reliable assessment tool and as the method of choice for clinical skills assessment[12].

In the workshop, trainers learnt how to construct an OSCE, and several concepts were clarified. The literature indicates that the OSCE should be applied carefully to obtain maximum benefit[13].

The participants showed a significant improvement in the mean score of the posttest delivered at the end of the workshop compared with the mean pretest score. OSCE can be used as a tool for formative and summative assessment, as a resource for learning, as a basis for abbreviated versions of physical examination assessments and to identify gaps and weaknesses in clinical skills[14-15].

Posttest agreement increased significantly on the questions about whether the scenarios are uniform for all candidates, whether the OSCE allows for teaching audit and for demonstration of emergency skills, and whether the OSCE is time-consuming to construct and administer. Various evidence-based sources indicate that multiple and emergency skills can be evaluated in an OSCE[16-17]. Many studies have shown that train-the-trainer workshops are an effective tool for continuing medical education among health care professionals in several fields. This methodology allows participants to apply the learned material through discussion with the facilitators and gives them the opportunity to ask questions for further clarification[18-19]. Our workshop participants showed appropriate posttest knowledge, and some of the themes were clarified during the workshop. Training the trainers is imperative to obtain maximum benefit and appropriate health care delivery[20-21].

The overall evaluation of the workshop was overwhelmingly positive. The majority of participants rated all items between agree and strongly agree. Most nurse educators were confident that the quality and practice session helped their learning, and they saw the OSCE, in terms of planning, organizing and designing stations, as a clinically useful new idea. In the simulation, the trainers were very excited and experienced how simulated patients feel when they are examined by students[22]. Their self-assessment was appropriate and honest, and the majority agreed that self-assessment is important for lifelong learning and identifies one's strengths and weaknesses[23].

In our workshop, the participants had the opportunity to demonstrate their knowledge and skills by conducting a real OSCE. Benefit was noted during the practical application of the training and in the ability to apply theoretical principles acquired earlier in the workshop. Workshop participants were positive about their ability to conduct an OSCE in the future[24].

Besides teaching skills and principles, clinical educators need to develop sound evaluation of what they teach. This workshop was conducted to help participants become trainers who can effectively assess their students' clinical skills and consequently identify any gaps in education. A positive attitude toward adopting OSCE was observed, which was reassuring, as more than two thirds of participants were inclined to conduct an OSCE in the future.

Conclusions

Train-the-trainer workshops for nurse educators may be a feasible and effective way to enhance knowledge and skills in conducting OSCEs. It would be reasonable to adopt an interactive, hands-on, exercise-rich methodology for such workshops, and our study may serve as a guide in this respect. We suggest conducting a follow-up workshop to explore barriers to, and feedback on, the participants’ implementation.

References

1. Miller G. The assessment of clinical skills/competence/performance. Academic Medicine 1990;65(Suppl 9):S63–S67.

2. Ahuja J. OSCE: a guide for students, part 1. Practice Nurse 2009;37(1):37–39.

3. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J 1975 Feb;1(5955):447–451.

4. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ 1979 Jan;13(1):41–54.

5. Calman L, Watson R, Norman N, Redfern S, Murrels T. Assessing practice of student nurses: methods, preparation of assessors and student views. Journal of Advanced Nursing 2002;38:516–523.

6. Wass V, van der Vleuten C, Shatzer J, Jones R. Assessment of clinical competence. The Lancet 2001;357:945–949.

7. Bujack L, McMillan M, Dwyer J, Hazleton M. Assessing comprehensive nursing performance: the objective structured clinical assessment (OSCA). Part 1: development of the assessment strategy. Nurse Education Today 1991;11:179–184.

8. Bujack L, McMillan M, Dwyer J, Hazleton M. Assessing comprehensive nursing performance: the objective structured clinical assessment (OSCA). Part 2: report of the evaluation project. Nurse Education Today 1991;11:248–255.

9. Kurz JM, Mohoney K, Plank LM, Lidicker J. Objective structured clinical examination and advanced practice nursing students. Journal of Professional Nursing 2009;25(3):186–191.

10. Bell-Scriber MJ, Morton AM. Clinical instruction: train the trainer. Nurse Educ 2009;34(2):84–87.

11. Harden RM. Twelve tips for organizing an Objective Structured Clinical Examination (OSCE). Med Teach 1990;12(3-4):259–264.

12. Zayyan M. Objective structured clinical examination: the assessment of choice. Oman Med J 2011 Jul;26(4):219–222.

13. Rushforth HE. Objective structured clinical examination (OSCE): review of literature and implications for nursing education. Nurse Education Today 2007 Jul;27(5):481–490.

14. Khattab AD, Rawlings B. Assessing nurse practitioner students using a modified objective structured clinical examination (OSCE). Nurse Education Today 2001 Oct;21(7):541–550.

15. Ross M, Carroll G, Knight J, Chamberlain M, Fothergill-Bourbonnais F, Linton J. Using the OSCE to measure clinical skills performance in nursing. Journal of Advanced Nursing 1988;13:45–56.

16. Bartfay W, Rombough R, Howse E, Leblanc R. The OSCE approach in nursing education. Canadian Nurse 2004;100(3):18–23.

17. Borbasi S, Koop A. The objective structured clinical examination: its application in nursing education. The Australian Journal of Advanced Nursing 1993;11(2):33–40.

18. Lara H, El-Khoury, Basem S, Umayya M, Jumana A. Impact of a 3-day OSCE workshop on Iraqi physicians. Family Medicine 2012 Oct;44(9):627–632.

19. Tanabe P, Stevenson A, Decastro L, et al. Evaluation of a train-the-trainer workshop on sickle cell disease for ED providers. J Emerg Nurs 2011 Sep 19.

20. Newble D. Techniques for measuring clinical competence: objective structured clinical examinations. Medical Education 2004;38:199–203.

21. Levine SA, Brett B, Robinson BE, et al. Practicing physician education in geriatrics: lessons learned from a train-the-trainer model. J Am Geriatr Soc 2007;55(8):1281–1286.

22. Alinier G. Nursing students’ and lecturers’ perspectives of objective structured clinical examination incorporating simulation. Nurse Education Today 2003;23:419–426. Hodges B. OSCE! Variations on a theme by Harden. Med Educ 2003 Dec;37(12):1134–1140.

23. Eva KW, Regehr G. Knowing when to look it up: a new conception of self-assessment ability. Academic Medicine 2007;82(10 Suppl):S81–S84.

24. Mattheos N, Nattestad A, Falk-Nilsson E, Attström R. The interactive examination: assessing students’ self-assessment ability. Medical Education 2004;38(4):378–389.

25. Roberts J, Brown B. Testing the OSCE: a reliable measurement of clinical nursing skills. The Canadian Journal of Nursing Research 1990;22(1):51–59.

26. Cazzell M, Rodriguez A. Qualitative analysis of student beliefs and attitudes after an objective structured clinical evaluation: implications for affective domain learning in undergraduate nursing education. J Nurs Educ 2011 Dec;50(12):711–714.

27. Watson R, Stimpson, Topping A, Porock D. Clinical competence assessment in nursing: a systematic review of the literature. Integrative Literature Reviews and Meta-Analyses 2002;39(5):421–431.
